The biggest risk in adopting artificial intelligence isn’t the looming threat of regulation but the failure to govern AI effectively.

By some estimates, more than 80% of AI projects fail, often not because of technological shortcomings but because governance is treated as an afterthought rather than a strategic imperative. That failure rate is roughly double that of traditional IT projects, revealing a critical blind spot in how organizations approach AI deployment.

In this article, we explore why robust AI governance in financial services is the linchpin for transforming AI from a risky experiment into a powerful asset. You’ll discover how financial institutions can navigate an increasingly complex AI regulatory landscape, mitigate operational and ethical AI risks, and harness AI responsibly to unlock operational efficiency, innovation, and competitive advantage.

If you want to ensure your AI initiatives succeed and avoid becoming another statistic, understanding effective AI governance is non-negotiable. Read on to learn how to build a governance framework that not only safeguards your institution but propels it forward in the rapidly evolving financial services industry.

The Regulatory Landscape for Artificial Intelligence in the Financial Sector

The financial sector is rapidly embracing Artificial Intelligence (AI), with generative AI leading a wave of transformation. By 2026, most large financial institutions are expected to adopt AI-driven platforms to improve agility, insight quality, and strategic decision-making.

Current governance models are often unprepared to manage the complexity and scale of these technologies. At the same time, regulatory momentum is accelerating globally, with frameworks like the EU AI Act and Canada’s proposed AIDA signaling stricter oversight ahead.

In the U.S., there is currently no single federal AI law, but sector-specific rules and agency guidance are emerging. New York City now requires bias audits of automated tools used in hiring and promotion decisions, while federal bodies like the FTC and EEOC are increasingly scrutinizing AI use. The Biden administration’s 2023 Executive Order on AI emphasized safe and trustworthy AI development and directed agencies such as NIST to develop guidelines for evaluating and red-teaming AI systems, pointing toward future audit standards.

In Canada, the proposed Artificial Intelligence and Data Act (AIDA) focuses on regulating high-impact AI systems developed and deployed by private-sector organizations, signaling the kinds of obligations Canadian financial institutions should prepare for.

Looking ahead, the EU AI Act, which entered into force in August 2024 and applies most of its obligations from August 2026, is likely to serve as a global model, especially for firms with international operations. It imposes strict requirements on high-risk systems, including those used for creditworthiness assessment and credit scoring, and introduces significant penalties for non-compliance.

These developments signal a clear trend: financial institutions must strengthen their AI governance, implement auditing tools, and proactively manage risks. As expectations rise for transparency, fairness, and explainability in AI systems, organizations that act early will be better positioned to comply, innovate safely, and stay competitive.

Challenges with AI Adoption in Financial Institutions

As AI adoption accelerates, financial institutions across North America are grappling with a range of challenges, technical, regulatory, and operational, that must be addressed to ensure safe and effective deployment.

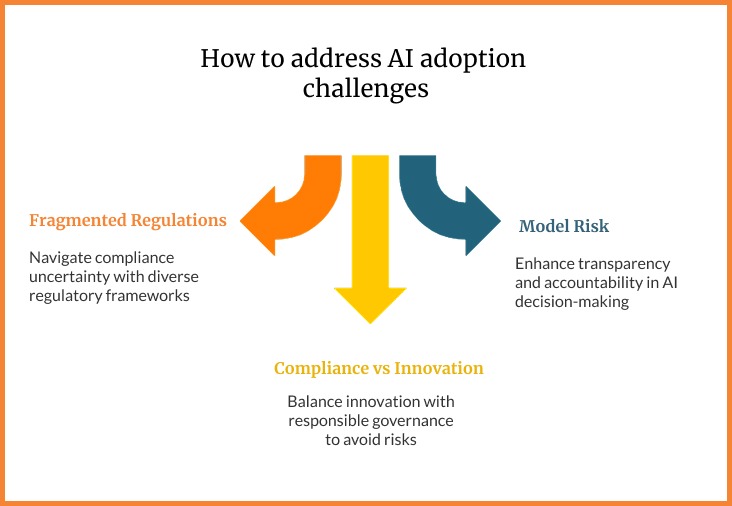

Fragmented Regulations and Standards

The regulatory environment is a patchwork. The U.S. lacks a unified federal AI law, relying instead on sector-specific rules and voluntary frameworks, while Canada’s proposed AIDA would set standards for high-impact systems. Meanwhile, the EU AI Act, whose main obligations apply from 2026, will influence global practices. Without consistent, widely accepted audit standards, financial institutions face compliance uncertainty and the risk of inconsistent oversight.

Model Risk and Explainability Gaps

GenAI introduces new layers of complexity. These systems generate content from diverse, unstructured data, which makes them prone to inaccuracies, hallucinations, and bias. Traditional governance frameworks often struggle to track or explain how these models reach their outputs, creating transparency and accountability risks in sensitive areas like credit decisions and fraud detection. Intellectual property (IP) concerns and improper data use further compound the issue.

Compliance vs. Innovation Dilemma

Firms are under pressure to innovate but fear that overregulation could stall progress. Yet strong, well-defined oversight tends to support sustainable innovation rather than block it. Leaders must balance rapid AI adoption with responsible AI governance, avoiding shortcuts that could expose their organizations to legal, ethical, or reputational harm.

Navigating these multifaceted challenges requires a comprehensive and adaptive approach to AI governance. Financial institutions that proactively address regulatory fragmentation, model risk, and the innovation-compliance balance will be better equipped to harness AI’s full transformative potential.

By embedding ethical principles, robust oversight, and integrated risk management into their AI strategies, organizations can turn these challenges into opportunities, ensuring not only compliance but also sustainable growth and competitive advantage in the evolving financial services landscape.

Robust AI Governance Frameworks

As generative AI reshapes financial services, robust governance is essential to ensure responsible, ethical, and compliant deployment. Financial institutions must build an AI governance structure that balances innovation with accountability.

Proposed governance structure

To manage the growing complexity of GenAI, financial institutions are adopting more flexible and layered governance models:

- Centralized AI Committees oversee early-stage adoption, with many evolving into specialized subcommittees for different risk areas (e.g., legal, compliance, technical).

- Integration with Enterprise Risk Models is key—AI risks (technical, legal, operational) must be embedded within broader risk governance frameworks like Model Risk Management (MRM).

- Human Oversight remains vital, especially for high-stakes applications. Experts must monitor outputs, redact sensitive customer data, and run structured tests using curated “golden lists” of test questions.

- Board-Level Involvement ensures accountability and legal preparedness. Yet only 18% of firms report having an enterprise-wide AI council, indicating a gap in structured oversight.

- Some organizations are experimenting with novel structures, like public benefit corporations and long-term safety trusts, to insulate governance from short-term profit pressures.
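
To make the “golden list” idea concrete, here is a minimal Python sketch of a regression check that runs curated test questions against a model and reports any answer missing required facts. The `ask_model` callable, the sample questions, and the substring-based grading are hypothetical stand-ins; a real program would plug in its own model client and combine automated checks with expert review.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class GoldenCase:
    """One curated test question and the facts a correct answer must mention."""
    prompt: str
    required_phrases: list[str]


def run_golden_list(
    ask_model: Callable[[str], str],
    cases: list[GoldenCase],
) -> list[tuple[str, list[str]]]:
    """Return (prompt, missing_phrases) for every case the model fails."""
    failures = []
    for case in cases:
        answer = ask_model(case.prompt).lower()
        # Case-insensitive substring check: a deliberately simple stand-in
        # for the human or automated grading a real program would use.
        missing = [p for p in case.required_phrases if p.lower() not in answer]
        if missing:
            failures.append((case.prompt, missing))
    return failures


if __name__ == "__main__":
    # Hypothetical stub standing in for the GenAI system under test.
    def stub_model(prompt: str) -> str:
        return "Wire transfers over the daily limit require branch approval."

    cases = [
        GoldenCase("What is needed for a large wire transfer?", ["branch approval"]),
        GoldenCase("Can I close my account online?", ["online banking"]),
    ]
    for prompt, missing in run_golden_list(stub_model, cases):
        print(f"FAIL: {prompt!r} missing {missing}")
```

Running the same list before every model or prompt change turns human-curated expectations into a repeatable release gate.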

Ethical AI principles and policy development

Strong ethical practices and foundations support sustainable AI use. Institutions must:

- Address Algorithmic Bias by auditing training data and testing models for discriminatory outcomes—especially in areas like credit scoring or fraud detection.

- Emphasize Explainability, not just transparency. Audits should go beyond technical disclosures to ensure AI-driven decisions can be clearly understood and justified.

- Protect Rights and Fairness by ensuring AI does not mislead users or compromise access to services. Policies should align with both legal requirements and social responsibility.

- Global frameworks like the EU AI Act, Canada’s proposed AIDA, and the White House Blueprint for an AI Bill of Rights reflect increasing expectations for transparency, non-discrimination, and ethical safeguards, standards that financial institutions must prepare to meet.
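
Auditing for discriminatory outcomes often begins with a simple comparison of outcomes across groups. The Python sketch below computes impact ratios, the measure used in New York City’s bias audits: each group’s selection rate (here, a loan approval rate) divided by the rate of the most-favored group. The 0.8 threshold borrows the “four-fifths” rule of thumb from U.S. employment guidance; the data, group labels, and threshold are illustrative assumptions, and a real fair-lending review would involve legal input and more rigorous statistical testing.

```python
from collections import defaultdict


def approval_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Approval rate per group from (group, approved) pairs."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def impact_ratios(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Each group's approval rate divided by the highest group's rate."""
    rates = approval_rates(decisions)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no approvals in any group; ratios are undefined")
    return {g: r / best for g, r in rates.items()}


def flag_groups(decisions: list[tuple[str, bool]], threshold: float = 0.8) -> list[str]:
    """Groups whose impact ratio falls below the (assumed) review threshold."""
    return sorted(g for g, ratio in impact_ratios(decisions).items() if ratio < threshold)


if __name__ == "__main__":
    # Synthetic data: group A approved 60 of 100, group B approved 42 of 100,
    # so B's impact ratio is 0.42 / 0.60, roughly 0.7.
    sample = [("A", i < 60) for i in range(100)] + [("B", i < 42) for i in range(100)]
    print(impact_ratios(sample))
    print(flag_groups(sample))
```

A ratio below the threshold is a signal to investigate, not proof of discrimination; small groups in particular can produce noisy ratios.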

Integration with enterprise risk management (ERM)

AI governance must be embedded within core enterprise risk processes:

- Update MRM Standards to include GenAI-associated risks such as dynamic inputs, evolving outputs, and multistep processing.

- Use AI Risk Scorecards to prioritize oversight based on customer exposure, model complexity, financial impact, and legal/ethical considerations.

- Implement a layered control framework:

  - Business Controls: Enable governance flexibility without stifling innovation.

  - Procedural Controls: Streamline approval and review processes for AI deployment.

  - Manual Controls: Include human validation of outputs and user feedback loops.

  - Automated Controls: Use AI tools for real-time data monitoring, compliance flagging, and vulnerability scanning.

- AI technologies like Retrieval-Augmented Generation (RAG) can help enforce stricter safeguards for customer-facing systems and improve supplier risk management.
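
The AI risk scorecard described above can be sketched as a weighted score over the four dimensions the article names: customer exposure, model complexity, financial impact, and legal/ethical considerations. In this minimal Python version, the 1-to-5 rating scale, the weights, and the tier cut-offs are hypothetical placeholders that an institution would calibrate for itself.

```python
from dataclasses import dataclass

# Hypothetical weights (summing to 1.0); each institution would calibrate its own.
WEIGHTS = {
    "customer_exposure": 0.3,
    "model_complexity": 0.2,
    "financial_impact": 0.3,
    "legal_ethical": 0.2,
}


@dataclass
class UseCase:
    name: str
    # Each dimension is rated from 1 (low) to 5 (high) by the reviewing team.
    customer_exposure: int
    model_complexity: int
    financial_impact: int
    legal_ethical: int


def risk_score(uc: UseCase) -> float:
    """Weighted average of the 1-5 ratings, so the result is also on a 1-5 scale."""
    total = 0.0
    for dim, weight in WEIGHTS.items():
        rating = getattr(uc, dim)
        if not 1 <= rating <= 5:
            raise ValueError(f"{uc.name}: {dim} must be between 1 and 5")
        total += weight * rating
    return total


def oversight_tier(score: float) -> str:
    """Map a score to a hypothetical oversight tier."""
    if score >= 3.5:
        return "high: committee approval and human review of outputs"
    if score >= 2.5:
        return "medium: procedural review and periodic sampling"
    return "low: automated monitoring"


if __name__ == "__main__":
    portfolio = [
        UseCase("internal meeting summaries", 1, 3, 1, 2),
        UseCase("customer-facing credit pre-screening", 5, 4, 4, 5),
    ]
    # Review the riskiest use cases first.
    for uc in sorted(portfolio, key=risk_score, reverse=True):
        score = risk_score(uc)
        print(f"{uc.name}: {score:.2f} -> {oversight_tier(score)}")
```

Keeping the weights in one table makes the scoring policy easy to review and version alongside other MRM documentation.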

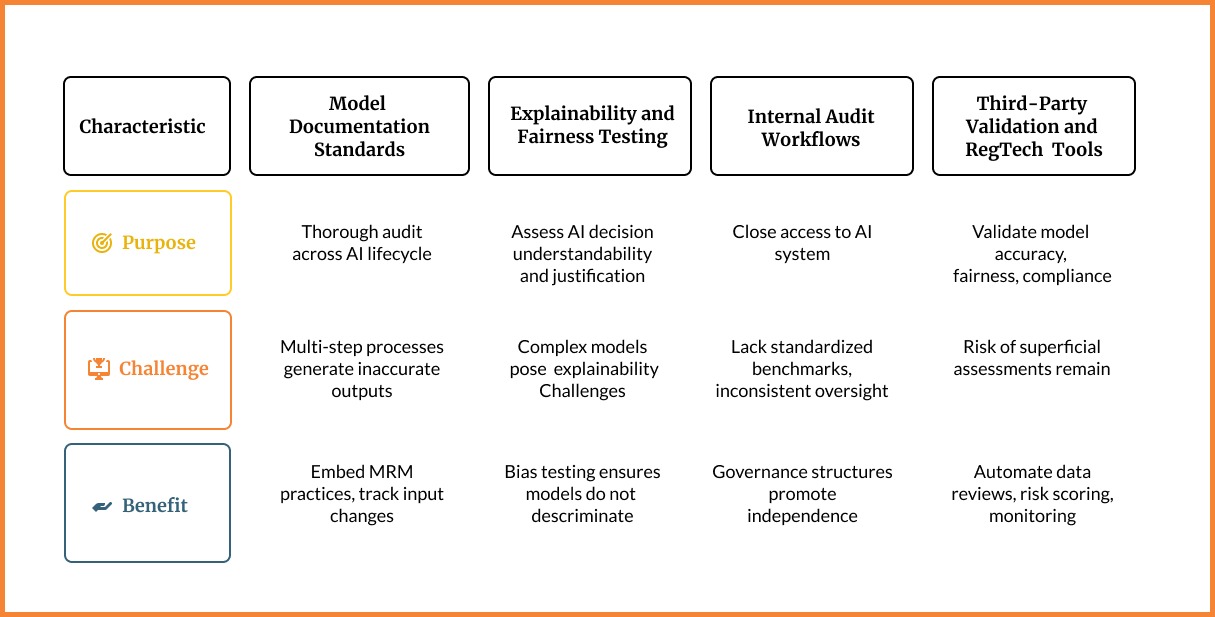

AI Auditing and Assurance Mechanisms

As generative AI becomes more deeply integrated into financial services operations, robust auditing and assurance mechanisms are essential for managing potential risks, ensuring fairness, and maintaining regulatory compliance. Financial and banking institutions must evolve beyond traditional risk frameworks to address the unique demands of AI technologies like machine learning and GenAI.

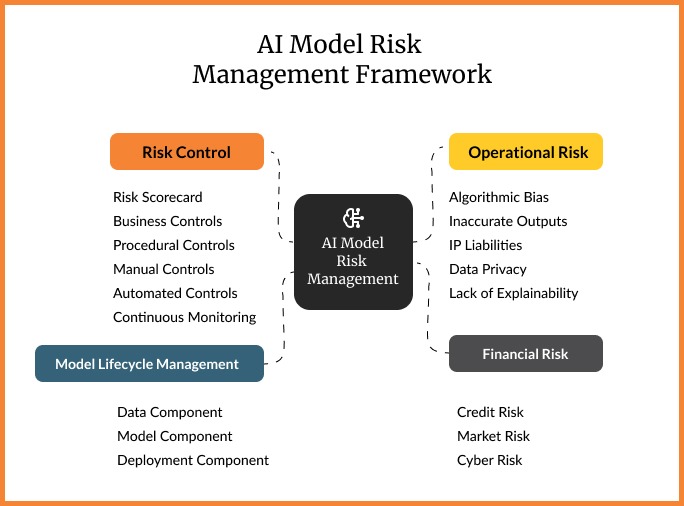

Risk Management in AI-Driven Operations

As financial institutions integrate Generative AI (GenAI) into operations, the traditional frameworks for managing operational, credit, market, and cyber risks must be enhanced.

GenAI introduces unique challenges due to its ability to generate new content using public, private, and multimodal data, which raises concerns about misuse, inaccuracy, and accountability. Institutions must embed AI-specific governance into their broader Enterprise Risk Management (ERM) systems.

| Risk Category | Key Risk | Description and Recommended Controls |
|---|---|---|
| Operational Risk | Algorithmic bias | AI systems trained on biased data can unintentionally produce discriminatory outcomes, especially in critical areas like credit scoring, fraud detection, and hiring. Regulatory efforts, such as New York City’s mandated bias audits, aim to curb this risk. |
| | Hallucinated outputs | GenAI may generate factually incorrect or misleading information, potentially affecting customer trust and regulatory compliance. |
| | IP infringement | AI tools may inadvertently generate or expose proprietary content, such as licensed code or sensitive business logic, increasing IP risk. |
| | Privacy breaches | GenAI’s reliance on vast datasets elevates the risk of data leaks or misuse. Responsible use requires secure handling of public and private data, particularly to avoid unauthorized exposure of sensitive information. |
| | Lack of explainability | Many AI systems lack transparency in how they reach decisions. Explainability, providing understandable reasons for decisions, is critical for accountability but remains difficult to implement, especially in complex models. |
| Credit, Market, and Cyber Risk Extensions | Discriminatory credit models | Bias in credit scoring algorithms may lead to discriminatory lending practices or inaccurate default predictions. Model drift and poor data quality can also affect fairness. |
| | Market model drift | Market models can lose accuracy as conditions change, and the rapid proliferation of AI technologies could itself shift market dynamics, requiring firms to monitor and recalibrate models to stay competitive. |
| | AI-powered cyberattacks | AI systems are high-value targets for cyberattacks, so secure data storage, encrypted communication, and controlled access are essential. Adversarial actors may also use AI to identify vulnerabilities or exploit model weaknesses. |
| Risk Controls and Monitoring Strategies | GenAI Risk Scorecard | A tool to assess and prioritize risks based on customer exposure, model complexity, financial impact, and ethical or legal concerns. |
| | Business Controls | Centralized or decentralized oversight structures (e.g., AI oversight committees and “GenAI accelerators”) provide adaptive risk management without slowing innovation. |
| | Procedural Controls | Updated Model Risk Management (MRM) standards are essential to address GenAI-specific risks like dynamic inputs and multistep processing. |
| | Manual Controls | Human oversight remains necessary for testing outputs, redacting sensitive data, and applying “golden lists” of test questions. User and employee feedback loops also support continuous improvement. |
| | Automated Controls | AI tools can sanitize data, flag anomalies, and conduct vulnerability testing. Retrieval-Augmented Generation (RAG) applications improve response accuracy and help enforce privacy and compliance standards. |
| | Continuous Monitoring | Real-time data tracking, transparency mechanisms, and automated reporting systems enable responsive risk management. |
| AI Model Lifecycle Management (ModelOps) | Data Component | Audits should identify bias, privacy issues, or irrelevant training data. Synthetic data also requires scrutiny to avoid amplifying harmful trends. |
| | Model Component | Beyond technical transparency, audits should ensure models are explainable. Auditors must investigate whether complexity is genuine or obfuscated and test for unintended or biased behavior. |
| | Deployment Component | Evaluation extends to the people, processes, and policies around the model, including version control, regulatory response plans, and compliance with state or municipal laws. |
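
Model drift, flagged in the table for both credit and market models, is typically caught through continuous monitoring that compares the data a model sees in production with the data it was validated on. One statistic widely used in credit risk for this is the Population Stability Index (PSI): the sum over bins of (actual share - expected share) × ln(actual share / expected share). The Python sketch below assumes a single numeric feature, hand-picked bin edges, and the conventional 0.1 and 0.25 alert bands; these are industry rules of thumb, not regulatory thresholds.

```python
import math
from bisect import bisect_right


def bin_shares(values: list[float], edges: list[float]) -> list[float]:
    """Share of values in each bin; k sorted interior edges give k + 1 bins."""
    if not values:
        raise ValueError("cannot compute shares of an empty sample")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected: list[float], actual: list[float], edges: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline sample and a current sample."""
    total = 0.0
    for e, a in zip(bin_shares(expected, edges), bin_shares(actual, edges)):
        # A small floor avoids log(0) when a bin is empty in either sample.
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total


def drift_status(value: float) -> str:
    """Assumed alert bands: < 0.1 stable, 0.1-0.25 monitor, >= 0.25 investigate."""
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "monitor"
    return "investigate"


if __name__ == "__main__":
    edges = [0.25, 0.5, 0.75]                  # hypothetical score bins
    baseline = [i / 100 for i in range(100)]   # validation-time scores
    mild = [0.1] * 20 + [0.3] * 25 + [0.6] * 25 + [0.9] * 30
    shifted = [v * 0.5 + 0.5 for v in baseline]  # every score pushed upward
    for name, current in [("mild", mild), ("shifted", shifted)]:
        value = psi(baseline, current, edges)
        print(f"{name}: PSI={value:.3f} ({drift_status(value)})")
```

In production, a check like this would run on a schedule for each key input and output, feeding the automated reporting described under Continuous Monitoring.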

Real-World Case Studies and Industry Benchmarks for AI Governance

Understanding how leading financial institutions are implementing AI governance provides valuable insights and practical benchmarks. These case studies highlight real-world examples from top banks and financial firms that demonstrate effective strategies, robust controls, and innovative approaches to managing AI risks while maximizing benefits.

JPMorgan Chase: Generative AI with Strong Controls

JPMorgan Chase has rolled out its LLM Suite to more than 200,000 employees, enhancing productivity in client interactions, legal document review, and call-center support.

The bank pairs GenAI with rigorous internal controls and human-in-the-loop oversight, restricting high-risk models to controlled use cases (e.g., travel planning) while tightly monitoring data privacy and output accuracy. Pointing to measurable results, JPMorgan credits AI with saving $1.5 billion through fraud prevention, trading, and credit decisions.

Wells Fargo: Fargo Chatbot with Governance Layers

Wells Fargo’s “Fargo” LLM-based chatbot, powered by Google’s PaLM 2, has handled over 20 million customer interactions since its 2023 launch. The bank wraps it in compliance controls and clear policies to separate low-risk customer tasks (e.g., transaction inquiries) from sensitive financial decisions.

Bank of America & Morgan Stanley: Controlled Rollout of AI Assistants

- Bank of America uses a four-layer AI framework, with “Erica” (now powered by GenAI) handling personalization and risk testing. The bank emphasizes top-down training to limit hallucination risk.

- Morgan Stanley’s “Debrief” (GPT-4-based) supports advisors by summarizing meetings and drafting emails; the rollout included ongoing monitoring and user feedback loops to prevent erroneous outputs.

PwC Canada / Major Canadian Bank: GenAI Platform & Governance

PwC Canada guided a leading Canadian bank to deploy an enterprise-scale GenAI platform with unified governance and automated bias checks. Highlights include:

- Democratizing AI access to business users

- Centralizing model libraries, decision workflows, and external/internal data inputs

- Enforcing automated compliance with data privacy and bias policies

- Continuous model monitoring and retraining

Mastercard & Citigroup: Fraud Detection & Compliance via AI

- Mastercard uses its proprietary GenAI to scan 125 billion annual transactions, improving fraud detection by up to 300% and reducing false positives by 20%.

- Citigroup applied GenAI to parse and summarize 1,089 pages of new US capital regulations. The tool enabled the identification of over 350 distinct use cases, enhancing compliance throughput.

These case studies illustrate the tangible impact of strong AI governance frameworks in driving operational efficiency, regulatory compliance, and customer trust across the financial services industry.

Final Takeaways: Strategic Recommendations for AI Governance in Financial Services

The rapid adoption of AI brings both opportunity and risk.

As regulations tighten and risks multiply, organizations that see governance as a burden will find themselves constantly reacting to crises.

On the other hand, those that embed governance into their strategy will move faster, innovate more safely, and earn the trust that drives long-term success. Key considerations for financial services leaders include:

1. Regulatory Momentum is Accelerating

As AI adoption grows, North American regulators are rapidly introducing frameworks (e.g., the U.S. Executive Order on AI, Canada’s proposed AIDA) that demand proactive alignment from financial institutions.

2. Robust AI Governance is Non-Negotiable

Effective governance, accountability, and auditability must be embedded into AI development and deployment to meet compliance expectations and manage reputational risk.

3. Institutions Must Operationalize AI Risk Management

Financial institutions must evolve traditional model risk management (MRM) to address GenAI-specific concerns, like data lineage, hallucinations, and explainability, with clear ownership and controls across the AI lifecycle.

4. Cross-Functional Collaboration is Key

Compliance, technology, risk, and legal teams must work in sync to create agile yet compliant AI systems, mirroring practices already visible in the banking sector at institutions such as JPMorgan Chase and RBC.

5. Strategic Advantage Lies in Responsible AI

Institutions that act now to build transparent, ethical, and regulated AI ecosystems will not only meet regulatory demands but gain customer trust and a sustainable innovation edge by 2026 and beyond.

Strong governance is no longer optional. It is the most reliable way to meet regulatory demands, avoid costly risks, and use AI to deliver measurable business outcomes.

The leaders who act now, building transparent, ethical, and accountable AI frameworks, won’t just keep pace with change; they’ll set the pace for everyone else.