AI is no longer experimental in financial services. In 2026, it is a board-level priority, a standing budget line, and a core part of hiring plans. Most banks, insurers, and capital markets firms now have formal AI product roadmaps.

Yet despite the volume of initiatives, the business impact often feels underwhelming.

Pilots impress stakeholders and quietly stall. “AI-powered” features ship without clear ownership. Tension grows between speed, safety, and accountability. This is not a model problem or a tooling problem.

It is a product definition problem.

At its core, AI is exposing a long-standing issue in financial services product teams: shipping components without owning decisions or outcomes. The challenge is amplified by a hard constraint unique to finance: many outcomes must remain deterministic. Two plus two must equal four. Anything else breaks reconciliation, reporting, and trust.

Generative AI, by contrast, is probabilistic by design. It predicts likely outputs and will occasionally be wrong in ways that feel arbitrary.

The real question for 2026 is not how to “add AI,” but how to design AI products that deliver deterministic business outcomes where ambiguity is unacceptable, while still benefiting from probabilistic reasoning where it is safe.

Why do AI roadmaps in financial services look busy but deliver little impact?

Usually, AI roadmaps optimize for visible activity (features, pilots, and demos) rather than ownership of business decisions and outcomes. They track motion, not responsibility.

Most institutions do not lack AI ideas. In fact, they usually have too many. Roadmaps quickly fill with initiatives like the following:

- A smarter agent desktop

- Better fraud detection

- Faster claim intake

- Automated KYC and AML reviews

- Personalized offers

- Chatbots for servicing

The issue is not ambition. It is structure.

The roadmap becomes crowded fast.

Many roadmaps are built around deliverables instead of decisions. They look productive because they contain constant motion, but activity alone does not equal value.

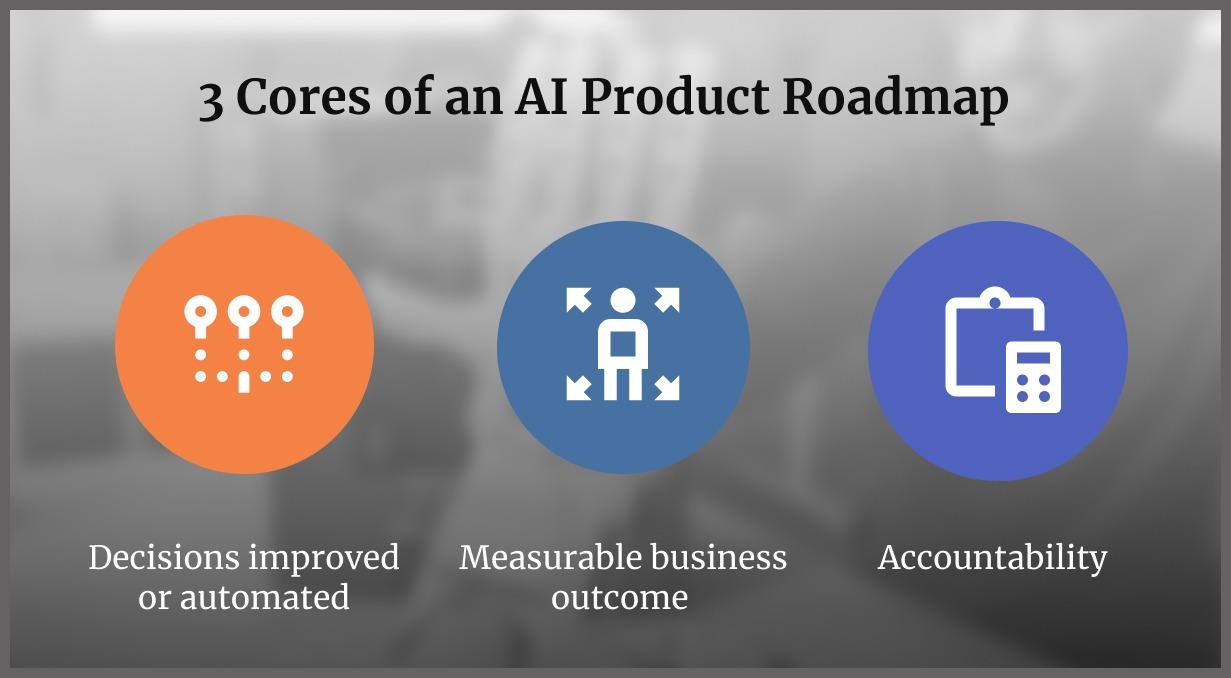

A credible AI product roadmap should answer three questions without hesitation:

- What decision will this product improve or automate?

- What measurable business outcome should change?

- Who owns the error when it goes wrong?

When those answers are missing, the roadmap becomes a catalogue, not a plan.
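
One way to make these three questions enforceable is to treat them as required fields on every roadmap entry. The sketch below is a hypothetical illustration in Python: the `RoadmapItem` class, its field names, and the sample entries are assumptions made for this example, not part of any particular planning tool.

```python
from dataclasses import dataclass


@dataclass
class RoadmapItem:
    """A roadmap entry. All names here are hypothetical, for illustration."""
    title: str
    decision: str = ""        # What decision will this improve or automate?
    outcome_metric: str = ""  # What measurable business outcome should change?
    error_owner: str = ""     # Who owns the error when it goes wrong?

    def missing_answers(self) -> list[str]:
        """Return the names of the questions this item leaves unanswered."""
        answers = {
            "decision": self.decision,
            "outcome_metric": self.outcome_metric,
            "error_owner": self.error_owner,
        }
        return [name for name, value in answers.items() if not value.strip()]


roadmap = [
    RoadmapItem(title="Chatbot for servicing"),
    RoadmapItem(
        title="Alert triage",
        decision="Escalate or close a fraud alert",
        outcome_metric="False-positive escalations per 1,000 alerts",
        error_owner="Head of fraud operations",
    ),
]

for item in roadmap:
    gaps = item.missing_answers()
    status = "plan" if not gaps else f"catalogue entry (missing: {', '.join(gaps)})"
    print(f"{item.title}: {status}")
```

An entry that cannot fill all three fields is flagged before it competes for budget, which is usually cheaper than discovering the gap in production.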

This pattern explains why many AI pilots appear successful but fail to scale, an observation based on repeated client programs across financial services.

Pilots run under protected conditions:

- Small volume

- Clean data samples

- Manual exception handling

- Friendly users

- No audit trail requirements yet

Then production begins and reality hits:

- Data is incomplete, inconsistent, or late

- Edge cases explode

- Model behavior shifts over time

- Users find shortcuts and workarounds

- Risk teams demand evidence and accountability

This is why many pilots “work” but do not scale. They prove a concept, not an operating model.

How initiatives labelled as “AI-powered” break product accountability

“AI-powered” describes a technique, not a product. It obscures who owns the decision, what constraints apply, and how failure is handled, making scale impossible.

Phrases like “AI-powered onboarding” or “AI-powered underwriting” sound modern, but they create dangerous ambiguity. They describe how something is built, not what it is responsible for.

A real product definition requires three elements:

- A clear responsibility

- A clear boundary

- A clear owner

Most “AI-powered” labels (“AI-powered X, Y, Z”) have none of these.

A better framing names the job to be done, not the technique. Instead of “AI-powered onboarding,” try:

“Reduce onboarding abandonment by making identity verification faster, while meeting policy and regulatory constraints.”

Notice what changes:

- The outcome is explicit.

- The constraints are explicit.

- The work becomes cross-functional by default.

Now you can ask the right questions:

- What decisions will the system make versus suggest?

- Which steps must be deterministic?

- What evidence must be recorded?

When teams adopt this framing, roadmaps shrink. They also become far more honest.

Why “AI everywhere” strategies struggle

Some institutions try to “infuse AI everywhere.” It sounds bold.

In practice, it spreads ownership thin. You end up with dozens of AI components, each with partial responsibility, and no single team accountable for business results.

That is how you get busy roadmaps and weak impact.

What decision should an AI product be accountable for?

An AI product must be accountable for a specific business decision – approve, reject, escalate, or recommend – not a screen, workflow, or assistant experience.

In financial services, products are decision systems. Even simple journeys contain decisions about eligibility, fraud risk, escalation, fee waivers, or routing.

When AI is introduced, teams must choose whether the product owns a decision or merely assists a human. Many initiatives never make this choice, and that ambiguity becomes a scaling blocker.

A practical diagnostic for product teams

If you want to spot a weak AI initiative fast, ask three questions.

Question 1: What decision does this product own?

Not a screen. Not a feature. A decision.

Examples:

- Approve or reject a transaction

- Escalate or close an alert

- Approve, decline, or request more evidence

If you cannot name the decision, you do not have product clarity.

Question 2: What evidence must be true for that decision?

Financial services decisions are evidence-bound.

Evidence might include:

- Transaction history

- Customer risk tier

- Policy rules

- Source documents

- Regulatory requirements

If the AI cannot link outputs to evidence, trust collapses.

Question 3: Who is accountable when it is wrong?

This is the question that exposes hidden politics. If the answer is “shared,” the initiative will stall.

In 2026, risk functions and regulators are asking for clearer accountability in AI-assisted decisioning. That pressure is rising, not falling.

When AI products turn into operating models

AI products inevitably turn into operating models because AI changes how work is performed, not just how it is displayed. Once AI influences decisions, it reshapes roles, controls, escalation paths, and performance metrics.

Traditional digital products can often be reduced to screens and APIs. AI products cannot. They alter how tasks are performed and how authority flows through the organization.

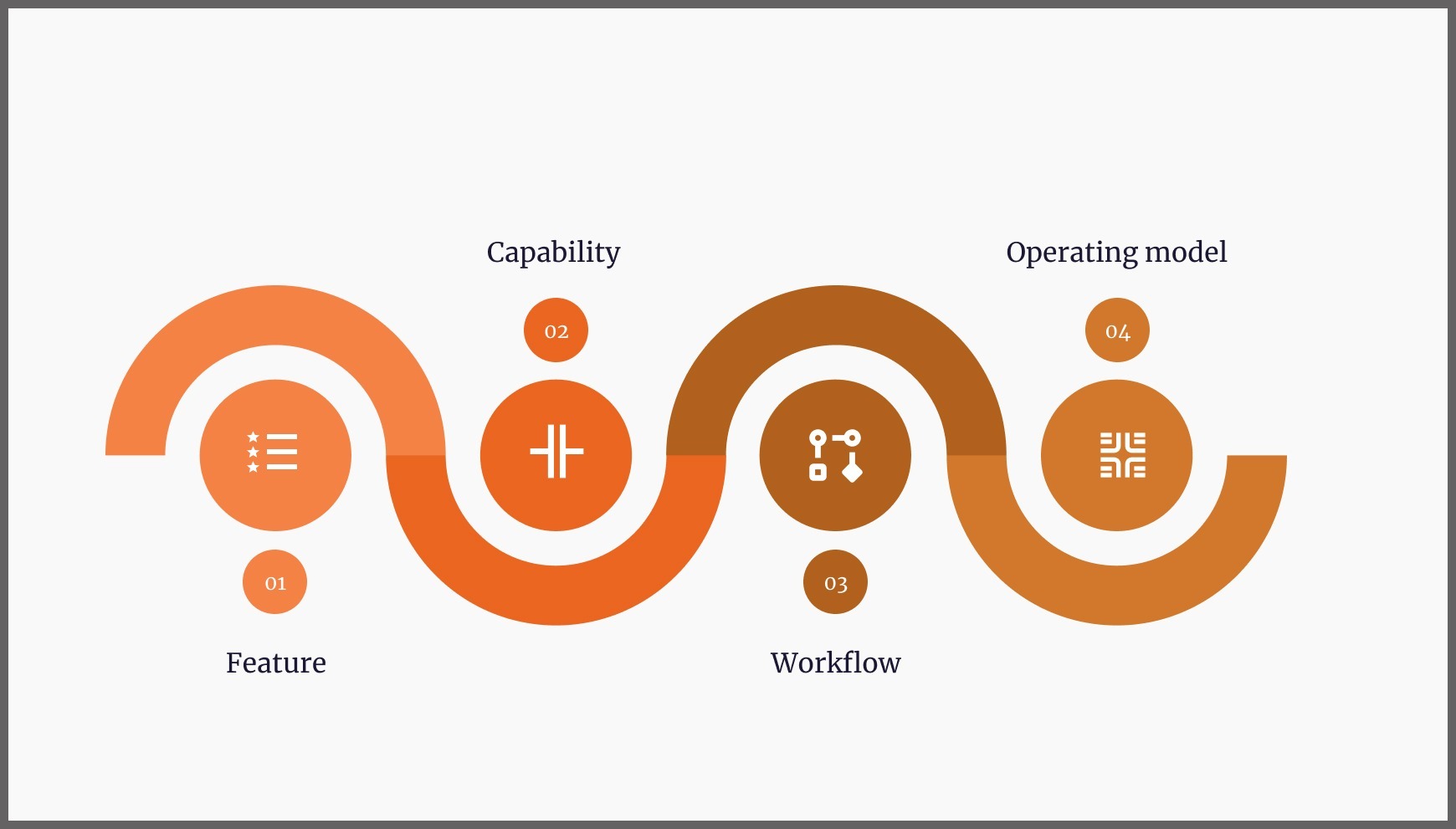

A useful shift model

Most teams start here:

1. Feature

Add a gen AI assistant to a tool

Better teams move here:

2. Capability

Improve the quality and speed of a task

Then the real scaling work begins:

3. Workflow

Redesign how a case moves from intake to resolution

And the mature form looks like this:

4. Operating model

Define roles, controls, escalations, and performance metrics around the AI-supported workflow

Many roadmaps stop at step 1. Successful programs design step 4.

Agentic systems raise the stakes further. Agents plan, call tools, and execute across workflows.

That collapses boundaries between:

- Product

- Operations

- Risk

- Technology

Treated as plug-ins, they fail. Treated as new workers with authority, constraints, and supervision, they can scale.

Determinism versus probability: Where, and why, must financial services systems remain deterministic?

Any system of record (ledger entries, pricing, eligibility thresholds, or regulatory reporting) must remain deterministic because even small errors create financial, legal, and trust failures.

The ‘2 + 2’ problem

This is the part many roadmaps ignore. Financial services often run on systems of record. Those systems depend on deterministic math and deterministic rules. When they are wrong, the damage is not cosmetic. It is financial, legal, and reputational.

There are zones where probabilistic output is not acceptable, like:

- Ledger postings

- Interest calculations

- Pricing and fees

- Eligibility and credit policy thresholds

- Regulatory reporting numbers

- Limits and exposure calculations

In these areas, “almost right” is not a thing.

Where probabilistic reasoning can help

There are other zones where probabilistic output can be valuable, if bounded.

For example:

- Summarizing case notes

- Drafting customer communications for review

- Suggesting investigation steps

- Extracting fields from messy documents, then validating

- Classifying requests with confidence thresholds

These are high leverage because they remove friction, not authority.

Balancing determinism and probability

Trying to make gen AI deterministic at the model level is the wrong fight. A better approach is to make the overall product deterministic where required.

The key move here is to split the system into deterministic and probabilistic layers:

- A deterministic core that owns calculation, rules, and final execution

- A probabilistic layer that supports humans and workflows with language and pattern recognition

The AI layer can suggest. The deterministic layer decides and records.

That separation is what keeps 2 plus 2 equal to 4.
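
A minimal sketch of that split, assuming a simple-interest calculation: the core uses exact decimal arithmetic with an explicit rounding rule, and any figure suggested by the AI layer is stored for audit but never posted. The function names, the actual/365 day count, and the rounding mode are assumptions for illustration only.

```python
from decimal import Decimal, ROUND_HALF_EVEN

CENT = Decimal("0.01")


def simple_interest(principal: Decimal, annual_rate: Decimal, days: int) -> Decimal:
    """Deterministic core: exact decimal arithmetic, explicit rounding rule.

    The actual/365 day count and banker's rounding are assumptions here.
    """
    raw = principal * annual_rate * Decimal(days) / Decimal(365)
    return raw.quantize(CENT, rounding=ROUND_HALF_EVEN)


def settle(principal: Decimal, annual_rate: Decimal, days: int,
           ai_suggested_amount: str, ledger: list) -> Decimal:
    """The AI layer may suggest a figure; only the core's figure is recorded."""
    amount = simple_interest(principal, annual_rate, days)
    ledger.append({
        "amount": amount,                      # what is actually posted
        "ai_suggestion": ai_suggested_amount,  # kept for audit only
        "ai_matched": Decimal(ai_suggested_amount) == amount,
    })
    return amount


ledger: list = []
posted = settle(Decimal("10000"), Decimal("0.05"), 30, "41.09", ledger)
print(posted, ledger[0]["ai_matched"])
```

Here the suggested figure is off by a cent, so the ledger records the core's value and flags the mismatch. The same inputs always produce the same posting, whatever the model says.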

How to get deterministic outcomes from probabilistic AI

If gen AI is probabilistic, how do we ship reliable AI products in financial services?

By separating the system into two parts: a deterministic core that owns calculations, rules, execution, and decision records, and a probabilistic layer that proposes, summarizes, and assists without final authority.

This separation ensures that two plus two always equals four, even when AI is involved.

Reliable AI products apply disciplined safeguards:

1. Constrain authority

AI proposes, deterministic services validate.

In most financial services contexts, gen AI should not be the execution engine.

A safe default pattern looks like this:

- AI proposes an action or conclusion

- Deterministic services validate against rules and data

- Humans approve where policy requires

- Systems execute

This keeps control in the right place. It also makes failure modes predictable.
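
The four steps above can be sketched as a small pipeline. This is an illustrative example under stated assumptions: the fee-waiver scenario, the `Proposal` type, and both policy limits are hypothetical, and a real system would call an execution service where the comment indicates.

```python
from dataclasses import dataclass
from decimal import Decimal

# Hypothetical policy values, chosen only for this example.
MAX_WAIVER = Decimal("50.00")
HUMAN_APPROVAL_ABOVE = Decimal("25.00")


@dataclass(frozen=True)
class Proposal:
    """What the AI layer hands over: a suggestion, never an instruction."""
    action: str
    amount: Decimal


def validate(proposal: Proposal) -> bool:
    """Deterministic check against rules; no model is involved."""
    return (proposal.action == "waive_fee"
            and Decimal("0") < proposal.amount <= MAX_WAIVER)


def process(proposal: Proposal, human_approved: bool = False) -> str:
    """Run a proposal through validate, approve, execute and return its status."""
    if not validate(proposal):
        return "rejected"
    if proposal.amount > HUMAN_APPROVAL_ABOVE and not human_approved:
        return "pending_human_approval"
    # A real system would call the execution service here.
    return "executed"


print(process(Proposal("waive_fee", Decimal("10.00"))))
print(process(Proposal("waive_fee", Decimal("40.00"))))
print(process(Proposal("waive_fee", Decimal("500.00")), human_approved=True))
```

Note that human approval cannot override a failed validation: the out-of-policy waiver is rejected even when `human_approved` is set, which is what makes the failure modes predictable.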

2. Force structured outputs, not free-form control signals

If your workflow depends on natural language alone, you will get surprises.

Use strict schemas. Examples of schema fields:

- Category label from an allowed list

- Confidence score

- Evidence references

- Recommended next step from a finite set

- Escalation flag

You can still show friendly language to the user. But you should not let free text drive execution.
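
As a rough sketch, a strict schema can be enforced with nothing more than the standard library. The category names, next-step values, and field names below are hypothetical; the point is only that anything outside the allowed sets is rejected before it can drive execution.

```python
import json
from dataclasses import dataclass

# Hypothetical allowed values for this example.
ALLOWED_CATEGORIES = {"card_dispute", "address_change", "fee_query"}
ALLOWED_NEXT_STEPS = {"auto_resolve", "request_documents", "route_to_specialist"}


@dataclass(frozen=True)
class ModelOutput:
    category: str
    confidence: float
    evidence_refs: tuple
    next_step: str
    escalate: bool


def parse_model_output(raw: str) -> ModelOutput:
    """Parse and validate model output; raise ValueError on any deviation."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError("output is not valid JSON") from exc
    if not isinstance(data, dict):
        raise ValueError("output must be a JSON object")

    category = data.get("category")
    if not isinstance(category, str) or category not in ALLOWED_CATEGORIES:
        raise ValueError("category is not in the allowed list")

    next_step = data.get("next_step")
    if not isinstance(next_step, str) or next_step not in ALLOWED_NEXT_STEPS:
        raise ValueError("next_step is not in the allowed set")

    confidence = data.get("confidence")
    if isinstance(confidence, bool) or not isinstance(confidence, (int, float)):
        raise ValueError("confidence must be a number")
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")

    refs = data.get("evidence_refs")
    if not isinstance(refs, list) or not all(isinstance(r, str) for r in refs):
        raise ValueError("evidence_refs must be a list of strings")

    escalate = data.get("escalate")
    if not isinstance(escalate, bool):
        raise ValueError("escalate must be true or false")

    return ModelOutput(category, float(confidence), tuple(refs), next_step, escalate)


good = ('{"category": "fee_query", "confidence": 0.92, "evidence_refs": ["doc-17"], '
        '"next_step": "auto_resolve", "escalate": false}')
print(parse_model_output(good))
```

Free text such as "Please refund the customer" fails at the first check, so the workflow never has to interpret it.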

3. Ground outputs in approved evidence

“Grounding” is often discussed like a model trick. In financial services, it is a product requirement. That means:

- The AI can only use approved knowledge sources

- The AI output must link to evidence internally

- The system must handle missing evidence by escalating, not guessing

This is where retrieval and knowledge controls matter. It is also where data governance stops being a background topic.
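
A grounding check can be expressed as a plain gate in front of the output. In this sketch, which assumes each claim carries a list of source IDs, the source names and the claim structure are hypothetical; missing or unapproved evidence results in escalation rather than a guess.

```python
# Hypothetical identifiers for approved knowledge sources.
APPROVED_SOURCES = {"policy-kb", "core-banking", "case-file"}


def check_grounding(claims: list[dict]) -> str:
    """Return 'accept' only if every claim cites evidence from approved sources.

    Each claim is a dict with 'text' and 'sources' (a list of source IDs).
    """
    if not claims:
        return "escalate"  # nothing to verify, so do not guess
    for claim in claims:
        sources = claim.get("sources", [])
        if not sources:
            return "escalate"  # claim has no evidence at all
        if any(source not in APPROVED_SOURCES for source in sources):
            return "escalate"  # claim relies on an unapproved source
    return "accept"


grounded = [{"text": "Fee is waivable under section 4.", "sources": ["policy-kb"]}]
ungrounded = [{"text": "Customer is probably eligible.", "sources": []}]
print(check_grounding(grounded), check_grounding(ungrounded))
```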

Add the missing piece: deterministic evaluation and regression testing

Many teams test AI like a demo. Financial services need a test harness that behaves more like software quality.

Include:

- Golden test sets for core scenarios

- Edge case libraries that grow over time

- Regression tests across prompt, model, and data changes

- Cost of error scoring, not only accuracy

- Stress tests for latency and volume

This is the difference between “it worked yesterday” and “it is dependable.”
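
To illustrate why cost-of-error scoring matters, here is a small sketch of a golden-set harness. The cases, expected decisions, and cost figures are invented for the example, and the two `decide` functions are stand-ins for two versions of a system under test.

```python
from decimal import Decimal

# Golden cases: scenario, expected decision, and the cost of getting it wrong.
# All values are invented for illustration.
GOLDEN_SET = [
    {"case": "low_value_known_merchant", "expected": "close", "error_cost": Decimal("5")},
    {"case": "new_payee_large_transfer", "expected": "escalate", "error_cost": Decimal("5000")},
    {"case": "dormant_account_reactivated", "expected": "escalate", "error_cost": Decimal("2000")},
]


def evaluate(decide, golden_set=GOLDEN_SET) -> dict:
    """Run `decide` over the golden set and score it by accuracy and by cost."""
    failures = [g for g in golden_set if decide(g["case"]) != g["expected"]]
    return {
        "accuracy": (len(golden_set) - len(failures)) / len(golden_set),
        "error_cost": sum((g["error_cost"] for g in failures), Decimal("0")),
        "failed_cases": [g["case"] for g in failures],
    }


def passes_regression(candidate: dict, baseline: dict) -> bool:
    """A change ships only if it does not raise the total cost of errors."""
    return candidate["error_cost"] <= baseline["error_cost"]


def always_escalate(case: str) -> str:
    """Stand-in version that over-escalates."""
    return "escalate"


def misses_dormant(case: str) -> str:
    """Stand-in version that wrongly closes the dormant-account case."""
    return "escalate" if case == "new_payee_large_transfer" else "close"


cautious = evaluate(always_escalate)
risky = evaluate(misses_dormant)
print(cautious["accuracy"] == risky["accuracy"], cautious["error_cost"], risky["error_cost"])
```

Both versions get two of three cases right, yet one mistake costs 5 and the other 2000. An accuracy-only report would treat them as equivalent.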

4. Confidence-based routing as a first-class product behavior

Low confidence should not be hidden. It should trigger a predictable path:

- Human review

- Request for more evidence

- Fallback response

- Escalation to a specialist queue
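
A routing rule like this can be written as an explicit, testable function. The sketch below assumes a confidence score between 0 and 1 and a flag for evidence completeness; the thresholds and route names are hypothetical and would be set by policy, not by the model.

```python
from enum import Enum


class Route(Enum):
    AUTO_PROCEED = "auto_proceed"
    HUMAN_REVIEW = "human_review"
    REQUEST_EVIDENCE = "request_more_evidence"
    SPECIALIST_QUEUE = "specialist_queue"


# Hypothetical thresholds for this example.
AUTO_THRESHOLD = 0.90
REVIEW_THRESHOLD = 0.60


def route(confidence: float, evidence_complete: bool) -> Route:
    """Map a confidence score to a predictable path."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if not evidence_complete:
        return Route.REQUEST_EVIDENCE  # never proceed on missing evidence
    if confidence >= AUTO_THRESHOLD:
        return Route.AUTO_PROCEED
    if confidence >= REVIEW_THRESHOLD:
        return Route.HUMAN_REVIEW
    return Route.SPECIALIST_QUEUE


for score in (0.95, 0.75, 0.20):
    print(score, route(score, evidence_complete=True).value)
```

Because the thresholds live in code rather than in a prompt, changing them is a reviewable, versioned decision.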

5. Versioning for audit and reproducibility

If you cannot answer “what changed,” you cannot operate at scale. Strong AI products track:

- Model version

- Prompt version

- Tool and retrieval configuration

- Data source versions

- Policy rules applied

Deterministic evaluation, regression testing, and versioning are operational hygiene, not bureaucracy. They are what make AI dependable rather than impressive once.
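
One possible way to make “what changed” answerable is to capture the tracked versions in a single immutable record and fingerprint it. This is a sketch under assumptions: the `RunConfig` fields mirror the list above, and the version strings are made up.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class RunConfig:
    """Everything needed to reproduce a decision. Field values are hypothetical."""
    model_version: str
    prompt_version: str
    retrieval_config: str
    data_source_versions: tuple
    policy_rules_version: str

    def fingerprint(self) -> str:
        """Stable short hash to store alongside each decision record."""
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


def what_changed(old: RunConfig, new: RunConfig) -> list[str]:
    """List the fields that differ between two configurations."""
    old_fields, new_fields = asdict(old), asdict(new)
    return [name for name in old_fields if old_fields[name] != new_fields[name]]


baseline = RunConfig("model-2026-01", "prompt-v7", "top_k=5", ("policy-kb@12",), "rules-v3")
candidate = replace(baseline, prompt_version="prompt-v8")
print(what_changed(baseline, candidate))
```

Storing the fingerprint with every decision lets an auditor tie any output back to the exact combination of model, prompt, data, and rules that produced it.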

What does “governance as a product feature” actually mean?

In 2026, governance means controls are visible and usable inside the workflow (approvals, evidence panels, and confidence indicators), not buried in policies or post-hoc reviews.

Governance is not separate from user experience; it is part of it. If governance is invisible, it will be bolted on later, and the product slows down or gets blocked.

A scalable AI product includes governance elements that users interact with. This can look like:

- An approval step for sensitive actions

- An explanation panel that shows evidence

- A confidence indicator that affects routing

- A “request review” action that creates an audit trail

- A clear escalation path when policy requires it

These are not add-ons. They are how the product stays alive in production.

Why hiding governance inside “compliance” fails

When governance lives in documents and committees only:

- Users experience surprises

- Engineers experience rework

- Risk teams experience uncertainty

- Leaders experience stalled rollouts

On the other hand, when governance is designed into the workflow:

- Adoption increases

- Exceptions become manageable

- Audit becomes possible

- Scaling becomes a normal path

How do you get your AI programs out of pilot limbo?

Pilot limbo is where many financial services AI efforts live. It looks like this:

- Many pilots

- Low measured P&L impact

- Repeated steering committee updates

- No scaled rollout

The fix is not “more pilots.” The fix is sharper product ownership.

Case in point

Situation

A financial institution ran more than ten gen AI pilots across servicing, fraud operations, and credit. A few pilots looked promising:

- Call summaries reduced after-call work

- Document extraction sped up intake

- Investigators produced better case notes

But nothing scaled. Risk raised consistency issues. Operations flagged rework. Product teams could not clearly name decision ownership or escalation paths.

Intervention

The institution made three moves:

- It reduced the initiative list and focused on two decisions with measurable cost and risk impact

- It designed governance into the workflow, including approvals and evidence links

- It separated deterministic core logic from probabilistic assistance

Outcome

Two products moved into daily operations. The biggest improvement was not model performance. It was product definition and operating readiness.

Metric upgrade to go from experimentation to operations

Pilots often use metrics like:

- Demo quality

- Time saved in a single task

- User feedback

Scaled products need operational metrics:

- Error cost, not only error rate

- Rework rate

- Exception volume

- Audit pass rate

- Decision turnaround time

- Drift signals over time

Once these metrics exist, roadmaps change. Teams stop shipping random features and start shipping controllable behaviors.
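
As a simple illustration, several of these operational metrics can be computed from the same case log. The record fields and sample values below are assumptions for the sketch; a real log would come from the case management system.

```python
from decimal import Decimal

# Hypothetical case log; each record describes one processed case.
cases = [
    {"correct": True, "reworked": False, "exception": False, "error_cost": Decimal("0")},
    {"correct": True, "reworked": True, "exception": False, "error_cost": Decimal("0")},
    {"correct": False, "reworked": True, "exception": True, "error_cost": Decimal("1200")},
    {"correct": True, "reworked": False, "exception": False, "error_cost": Decimal("0")},
]


def operational_metrics(case_log: list[dict]) -> dict:
    """Summarize a case log into operational metrics."""
    if not case_log:
        raise ValueError("case log is empty")
    total = len(case_log)
    errors = [c for c in case_log if not c["correct"]]
    return {
        "error_rate": len(errors) / total,
        "error_cost": sum((c["error_cost"] for c in errors), Decimal("0")),
        "rework_rate": sum(1 for c in case_log if c["reworked"]) / total,
        "exception_volume": sum(1 for c in case_log if c["exception"]),
    }


print(operational_metrics(cases))
```

In this sample the error rate is only one in four, but the single error carries the entire cost, which is the signal an error-rate dashboard alone would hide.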

The ROI pressure

When leaders ask for ROI within a quarter or two, weak initiatives collapse. Strong product teams do three things:

- Kill low-impact assistants

- Merge overlapping pilots

- Rescope toward decisions with clear value and clear control

This is how focus turns into impact.

What is the AI product leader’s real job in 2026?

The AI product leader must define decision boundaries, align value with control, and ensure AI systems are operable, auditable, and accountable from day one.

In 2026, product leadership in financial services is less about shipping and more about definition: definition of value, of responsibility, and of control.

The product leader must own:

1. A decision catalogue

Build a living list of business decisions. This turns “AI ideas” into a prioritized decision map.

For each decision, capture:

- Current process and systems

- Risk level and regulatory sensitivity

- Error cost and acceptable failure modes

- Where determinism is required

- Where probabilistic assistance is safe

2. Decision boundaries as product requirements

For every AI-enabled decision, define:

- What is in scope

- What is out of scope

- What triggers escalation

- What evidence is required

- What approvals are mandatory

This is where accountability becomes real.
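
Written down as data, a decision boundary becomes something the system can check on every case. The sketch below is hypothetical: the `DecisionBoundary` type, the onboarding scenario, and every value in it are assumptions used only to show the shape of such a requirement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecisionBoundary:
    """A decision boundary expressed as data. All values are hypothetical."""
    decision: str
    in_scope: frozenset             # case types the product may handle
    escalation_triggers: frozenset  # flags that force escalation
    required_evidence: frozenset    # evidence that must be present
    mandatory_approvals: frozenset  # approvals that must be recorded


def evaluate_case(boundary: DecisionBoundary, case_type: str, flags: set,
                  evidence: set, approvals: set) -> str:
    """Apply the boundary in a fixed order and return the resulting status."""
    if case_type not in boundary.in_scope:
        return "out_of_scope"
    if flags & boundary.escalation_triggers:
        return "escalate"
    if not boundary.required_evidence <= evidence:
        return "missing_evidence"
    if not boundary.mandatory_approvals <= approvals:
        return "awaiting_approval"
    return "proceed"


onboarding = DecisionBoundary(
    decision="Approve, decline, or request more evidence for onboarding",
    in_scope=frozenset({"retail_individual"}),
    escalation_triggers=frozenset({"sanctions_hit", "pep_match"}),
    required_evidence=frozenset({"id_document", "address_proof"}),
    mandatory_approvals=frozenset({"compliance_reviewer"}),
)

print(evaluate_case(onboarding, "retail_individual", {"pep_match"},
                    {"id_document", "address_proof"}, set()))
```

Anything not listed as in scope is refused by default, so extending the product to a new case type requires an explicit change to the boundary.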

3. Governance as a visible product surface

Design controls people can use and make them part of the workflow, not a separate process.

Make them fast. Make them clear.

4. Operational readiness from day one

If you want scale, plan for operations early and include:

- Monitoring and alerting

- Runbooks for incidents

- Fallback modes

- Training for users and reviewers

- Change management for teams whose work will shift

5. Cross-functional alignment

AI products force four groups to align:

- Design, to build trust and usability

- Engineering, to build reliable systems

- Data and AI, to build evaluation and monitoring

- Risk and compliance, to define safe boundaries

If any one group is “consulted late,” scaling slows. Product is the only function positioned to unify these threads around one product truth.

Rewriting your AI roadmap

A simple way to rewrite your AI roadmap starting tomorrow looks like this:

Step 1: Rewrite every initiative as a decision statement

Instead of “AI assistant for agents,” write: “Improve the triage decision so that routine cases are resolved without escalation, while maintaining policy compliance.”

Step 2: Mark deterministic zones

For each initiative, label:

- Deterministic core steps

- Probabilistic assistance steps

- Escalation points

- Approval requirements

Step 3: Add the missing product components

Most roadmaps omit these:

- Evaluation harness

- Monitoring and drift management

- Audit and reproducibility

- User-visible governance

- Operating model changes

If these are not planned, pilots will not scale.

Final Takeaway: AI can force product clarity or expose its absence

AI is not failing financial services. It is removing the cover that weak product definition once hid behind.

In 2026, models are good enough. Tooling is mature. Access is no longer the constraint. What AI is doing, often uncomfortably well, is forcing organizations to confront questions they could previously defer: Who owns the decision? What must be provably correct? Where is uncertainty acceptable? And who is accountable when it fails?

Roadmaps that treat AI as a feature factory will continue to look busy and feel disappointing. They will ship assistants, pilots, and incremental capabilities without ever changing outcomes. Roadmaps that treat AI as a decision system, designed around deterministic cores, bounded probabilistic layers, and visible governance, will look simpler, slower at first, and far more effective over time.

The institutions that succeed will not be the ones with the most AI initiatives. They will be the ones with the clearest answers to what their AI products are allowed to decide, what they are not, and how the business operates around those decisions every day.

AI does not reward ambition alone. It rewards clarity, and in financial services, clarity is no longer optional. It is the cost of scale.

FAQs

Why do AI initiatives in financial services struggle to scale?

AI initiatives struggle to scale because many are designed as pilots or features rather than decision systems. Scaling requires clear decision ownership, operating readiness, deterministic controls, and defined error handling, elements often missing from early AI efforts.

What is the difference between an AI feature and an AI product?

An AI feature enhances a task or interface. An AI product owns a business decision, operates within defined constraints, and is accountable for outcomes. In financial services, products must also support auditability, governance, and deterministic execution.

How can generative AI be used safely in regulated environments?

Generative AI can be used safely by confining it to a probabilistic layer that proposes or assists, while a deterministic core validates, executes, and records decisions. This design allows institutions to benefit from AI without sacrificing control.

Why do so many AI pilots fail to deliver business value?

Many AI pilots succeed technically but fail operationally. They are tested in isolation, without clear ownership of decisions, defined error costs, or production-ready governance. As a result, they cannot survive real-world complexity at scale.

How should I structure my AI product roadmap?

AI product roadmaps should be structured around business decisions rather than features. Each initiative should specify the decision it owns, the evidence required, where determinism is mandatory, where probabilistic assistance is safe, and how governance is enforced.